Heya! I am a Research Scientist at Google DeepMind based in London, UK, working with the Gemini reasoning and code teams. I was previously part of the post-training team.

I received my Ph.D. from the Department of Computer Science and Operations Research at the University of Montreal, where I was part of Mila - Quebec AI Institute, advised by Christopher Pal. My thesis investigates the effects of self-play in emergent languages that arise in multi-agent communication systems.

I was fortunate to do research internships at: Google DeepMind in London, advised by Grzegorz Swirszcz, Wojciech Czarnecki, and Oriol Vinyals, on opponent behavior modeling with offline AlphaStar; Meta AI in Seattle, advised by Madian Khabsa and Roberta Raileanu, on improving generalization in RL using uncertainty estimates; Microsoft Research NYC, advised by Jordan Ash, on replacing PPO with weighted SFT in RLHF; the Labs team at Google Research in Mountain View, advised by Navneet Potti, on enhancing code correctness in LLMs through RL with execution feedback; and Microsoft Research Cambridge, working with Raluca Georgescu, Sam Devlin, and Katja Hofmann on learning human-like behavior using offline RL and imitation learning.

I received my Master's degree from the Courant Institute of Mathematical Sciences at New York University. I was part of the CILVR Lab, where I worked with: Kyunghyun Cho (also at Prescient Design - Genentech) on analyzing compositionality in emergent languages; Jason Weston on creating a unified model for QA/VQA tasks. I also interned at Adobe Research in San Jose, advised by Scott Cohen and Brian Price, on building an intelligent vision-language assistant. During my undergrad, I spent some time at the Language Technologies Institute at Carnegie Mellon University, advised by Florian Metze (also at Meta AI), on context-aware speech recognition. I also spent a semester at the School of Computing at the National University of Singapore, working with Khe Chai Sim (now at Google DeepMind) on visualizing activations of live recorded audio using Kaldi.

Affiliations

2024 -

2018 - 2024

2016 - 2018

2011 - 2016

Internships

Fall 2023 - Spring 2024

Fall 2022

Fall 2021, Spring 2023, Summer 2023

Spring - Summer 2021

Fall 2019

Summer 2017

Spring - Summer 2016

Fall 2015

Publications

Abhinav Gupta

Ph.D. Thesis, University of Montreal, 2024

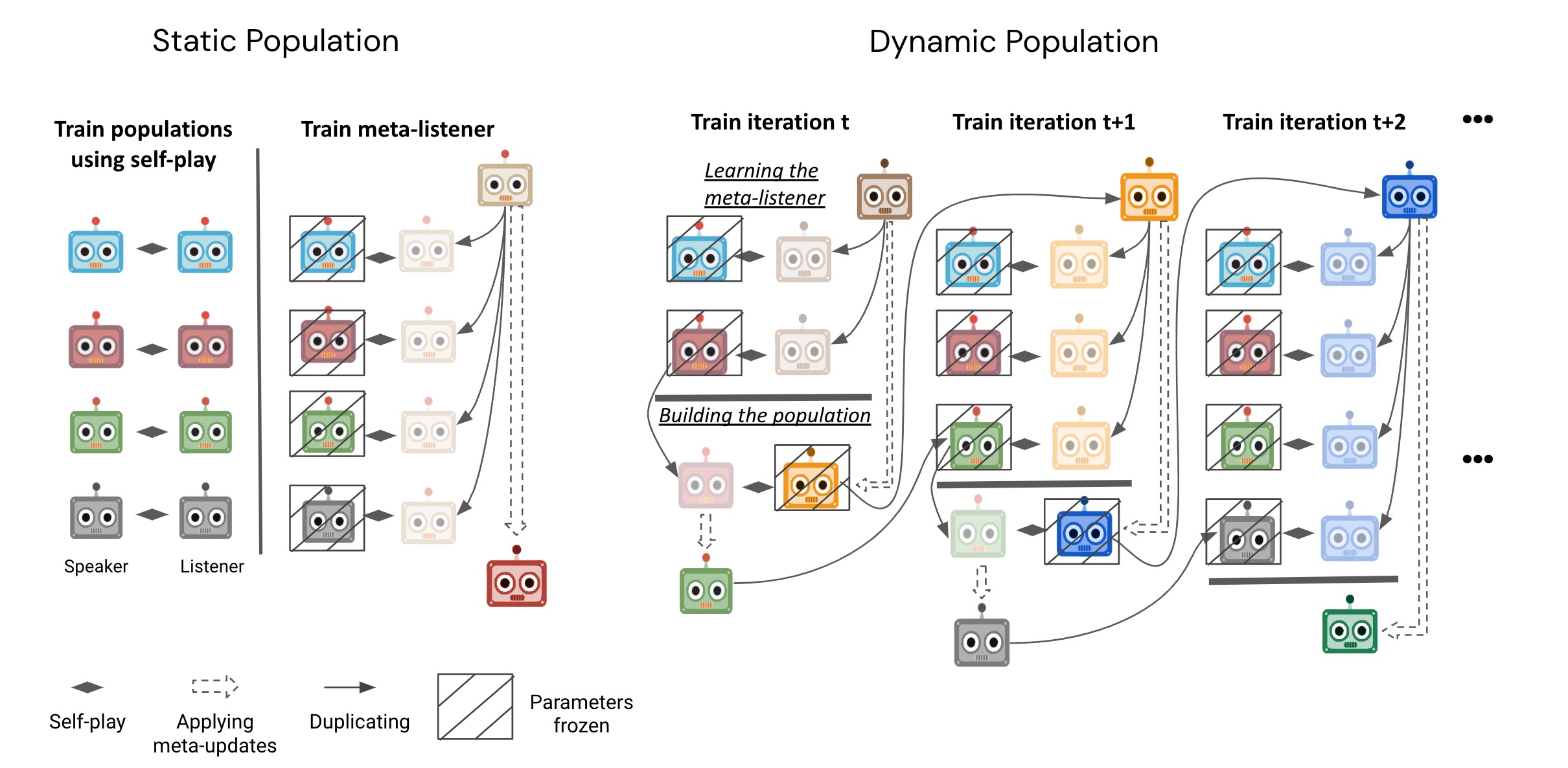

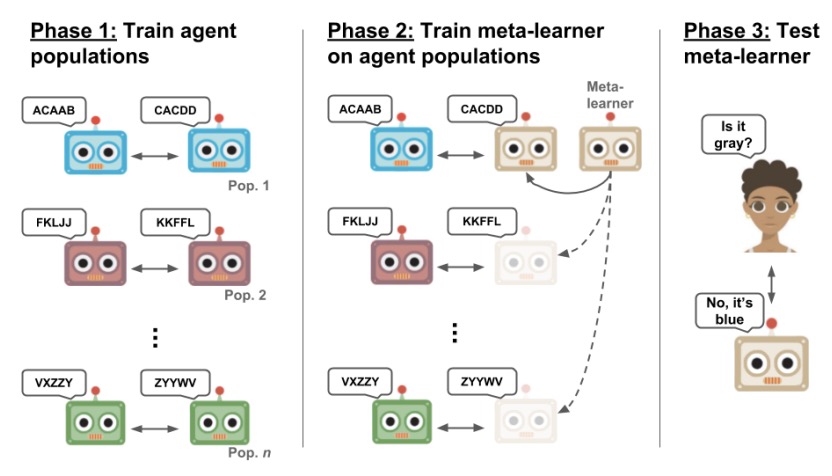

Abhinav Gupta, Marc Lanctot, Angeliki Lazaridou

Neural Information Processing Systems (NeurIPS), 2021

Learning to Learn Workshop (ICLR), 2021

Talk / Slides / Twitter / Workshop / Poster

By adopting an iterative mechanism of distillation and expansion, we can obtain a diverse population of agents capable of performing few-shot coordination with unseen partners.

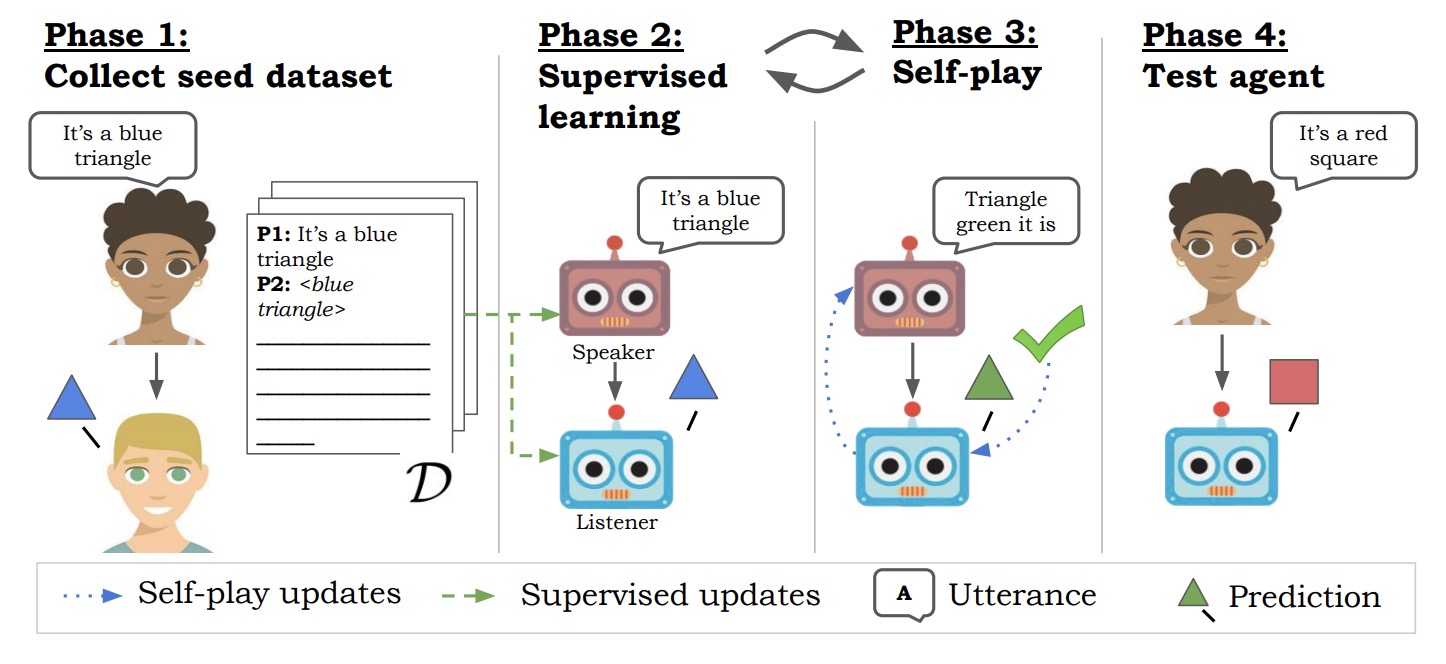

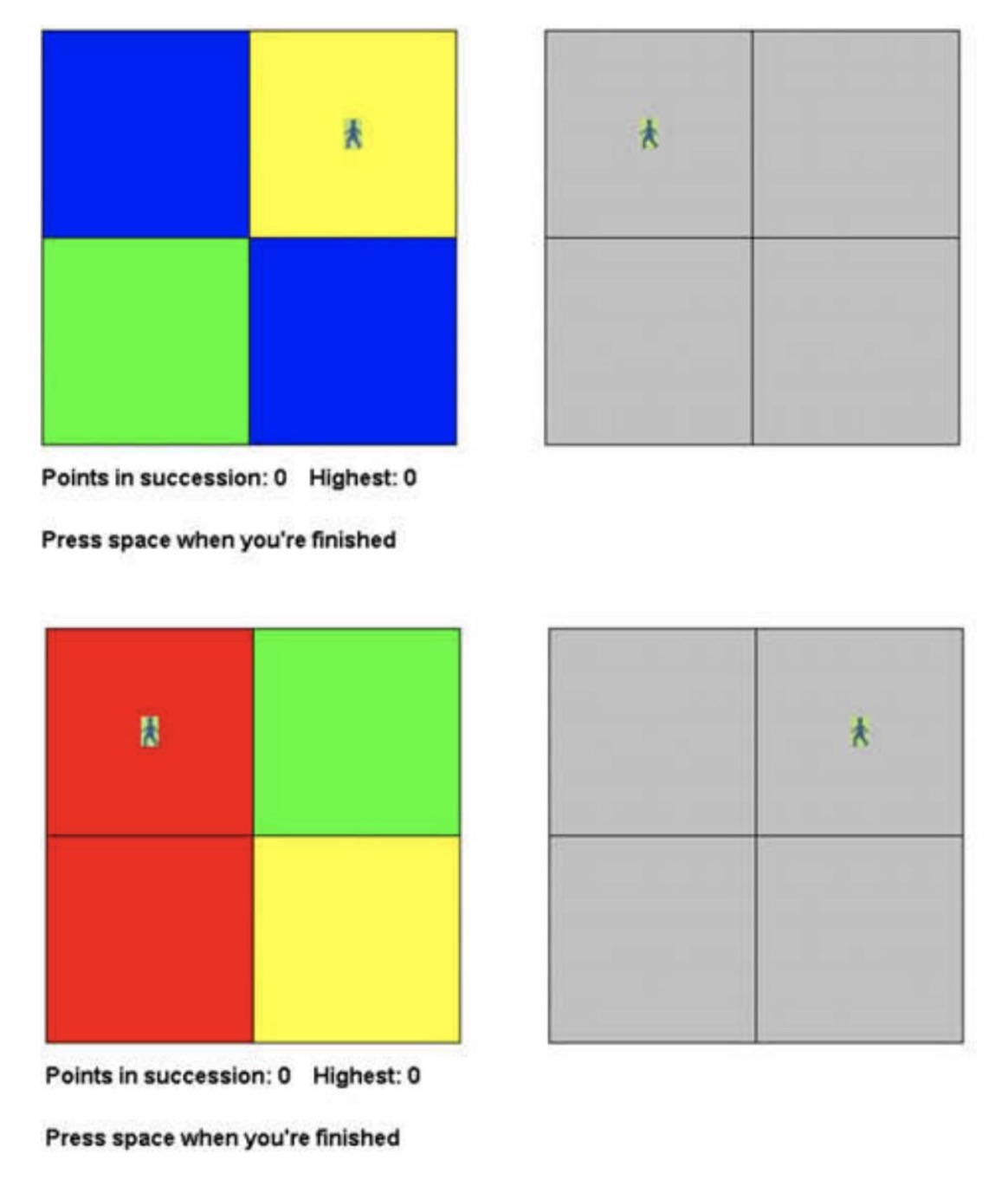

Ryan Lowe*, Abhinav Gupta*, Jakob Foerster, Douwe Kiela, Joelle Pineau (*equal contribution)

International Conference on Learning Representations (ICLR), 2020

Beyond Vision and LANguage: inTEgrating Real-world kNowledge - LANTERN Workshop (EMNLP), 2019

Talk / Slides / Twitter / GitHub / Workshop

Training agents via supervised learning on human data followed by self-play outperforms the converse, suggesting that it is not beneficial to emerge languages from scratch.

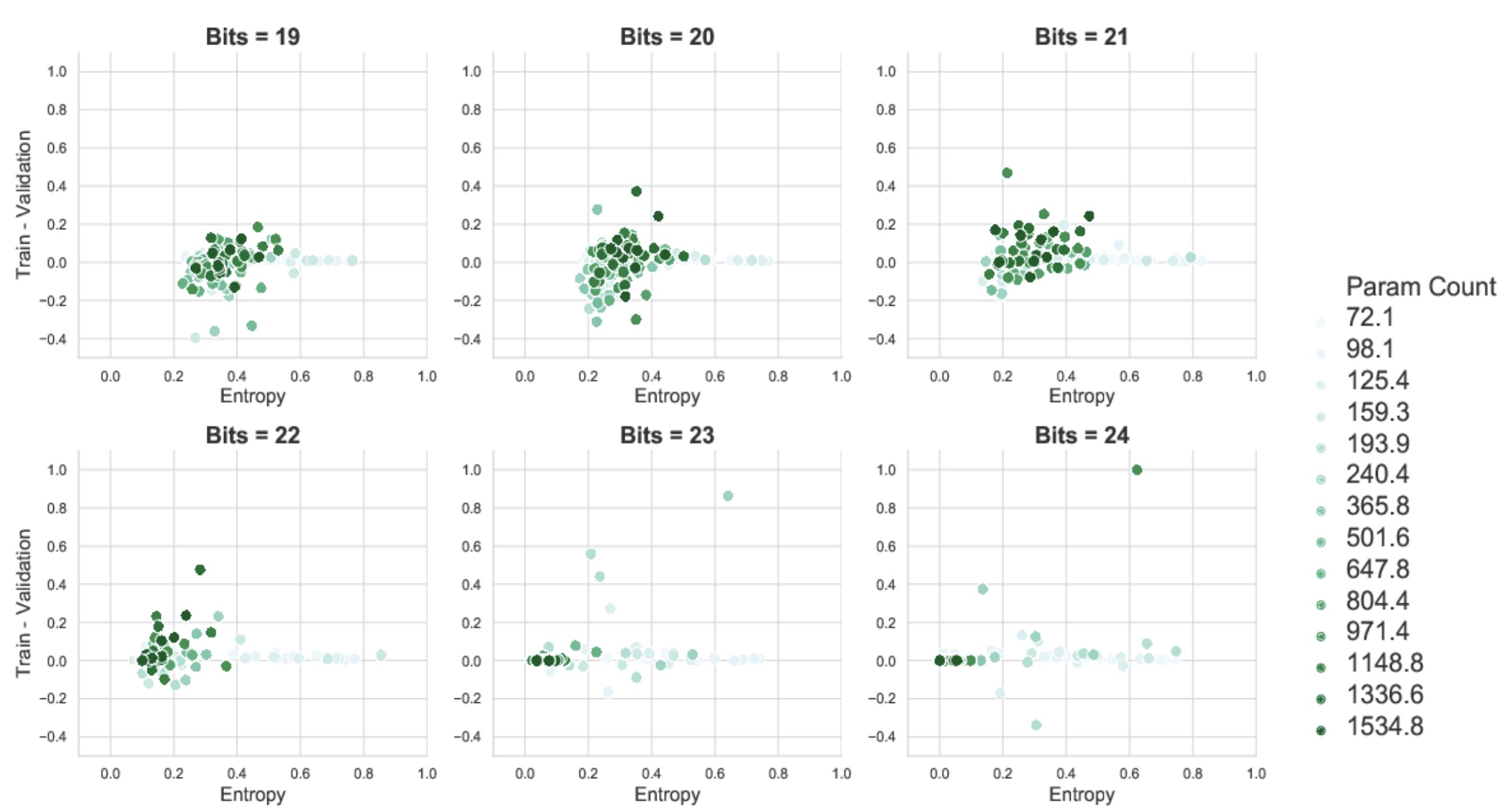

Cinjon Resnick*, Abhinav Gupta*, Jakob Foerster, Andrew M. Dai, Kyunghyun Cho (*equal contribution)

International Conference on Autonomous Agents and Multiagent Systems (AAMAS), 2020

Representation Learning for NLP - RepL4NLP Workshop (ACL), 2020

Talk / Slides / Twitter / GitHub / Workshop

Investigate the relationship between model capacity and channel bandwidth that induces compositional structure in the resulting language and consequently encourages systematic generalization.

Abhinav Gupta*, Ryan Lowe*, Jakob Foerster, Douwe Kiela, Joelle Pineau (*equal contribution)

Multi-disciplinary Conference on Reinforcement Learning and Decision Making (RLDM), 2019

(Oral Presentation)

Adaptive and Multitask Learning - AMTL Workshop (ICML), 2019

Train a meta-learning agent in simulation to interact with populations of pre-trained agents and then deploy it in unseen human populations to learn human language.

Edward Hughes, Abhinav Gupta, Ekaterina (Kate) Tolstaya, Thom Scott-Phillips

Behavioral and Brain Sciences, Volume 46, 2023

Machine Learning and the Evolution of Language - ml4evolang Workshop (JCoLE), 2022

(Oral Presentation)

Identify a minimal set of socio-cognitive biases, derived from human behavior, that help AI agents learn about pragmatic reasoning and ostension using an inverse model to interpret their partner's communicative intent in an online manner.

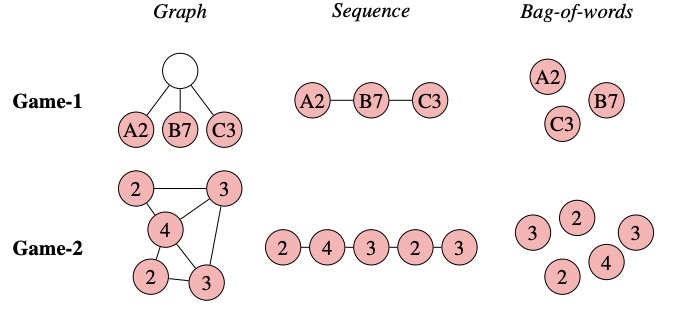

Agnieszka Słowik*, Abhinav Gupta*, William L. Hamilton, Mateja Jamnik, Sean Holden, Christopher Pal (*equal contribution)

Annual Meeting of the Cognitive Science Society - Member Abstract (CogSci), 2022

Adaptive and Learning Agents - ALA Workshop (AAMAS), 2020 (Short Talk)

Reinforcement Learning in Games - RLG Workshop (AAAI), 2020

Talk / Poster / AAMAS Workshop / AAAI Workshop / RLG Poster

Agents parametrized by graph neural networks develop a more compositional language compared to bag-of-words and sequence models.

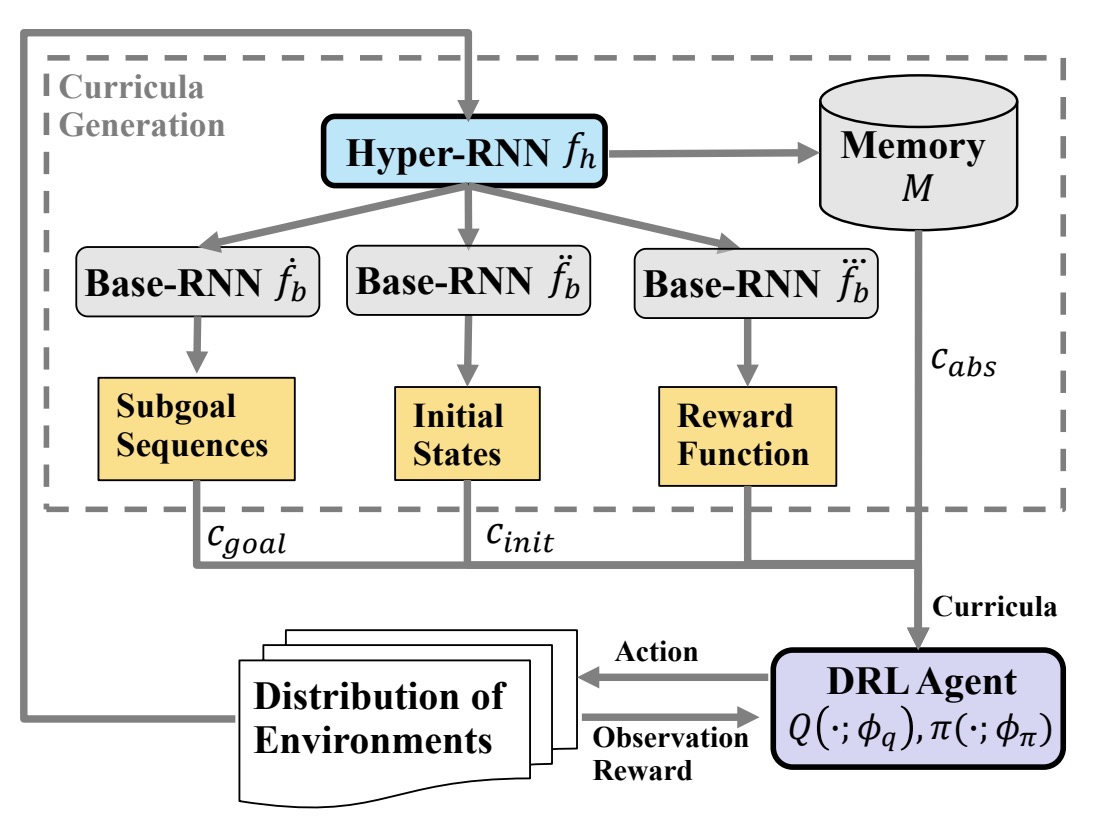

Jikun Kang, Miao Liu, Abhinav Gupta, Christopher Pal, Xue (Steve) Liu, Jie Fu

Conference on Robot Learning (CoRL), 2022

Introduce a unified automatic curriculum learning framework to create multi-objective but coherent curricula for improving sample efficiency using a shared hyper-network parameterized with an RNN.

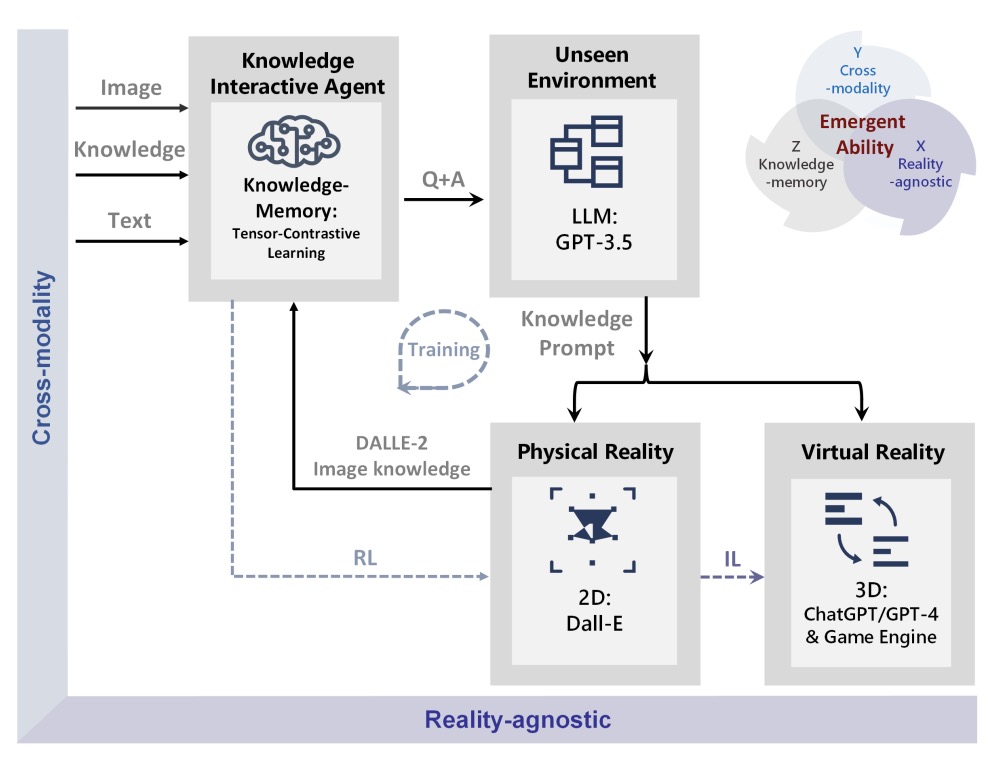

Qiuyuan Huang*, Jae Sung Park*, Abhinav Gupta*, Paul Bennett, Ran Gong, Subhojit Som, Baolin Peng, Owais Khan Mohammed, Christopher Pal, Yejin Choi, Jianfeng Gao (*equal contribution)

arXiv preprint, 2023

Infusing explicit knowledge for factuality and commonsense reasoning using external knowledge bases (RAG) helps in obtaining enhanced prompts to improve cross-modality performance, e.g., for image generation tasks using DALL-E 2.

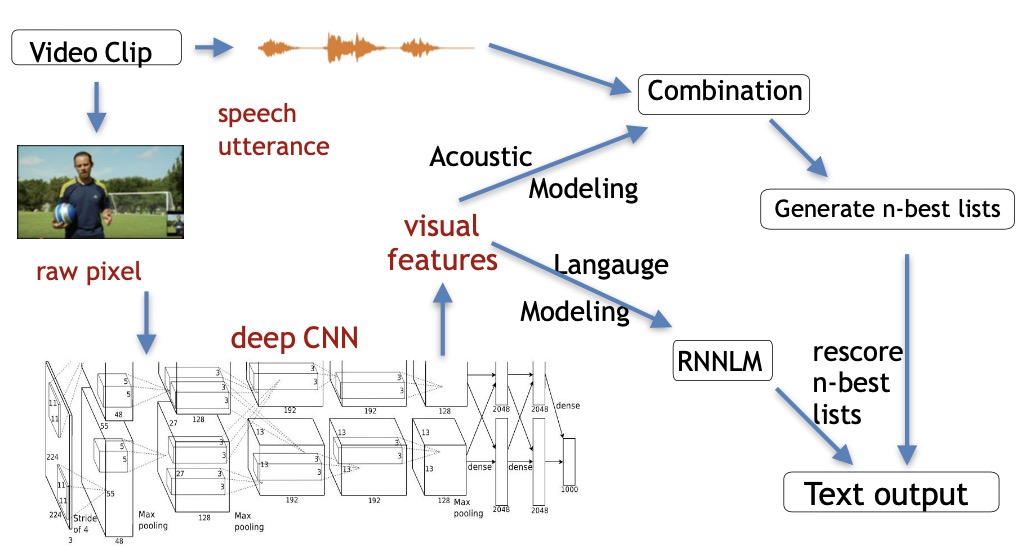

Abhinav Gupta, Yajie Miao, Leonardo Neves, Florian Metze

IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2017

(Best Student Paper Nominee)

Adapting acoustic and language models to objects and scenes in context-aware Automatic Speech Recognition for video transcription.

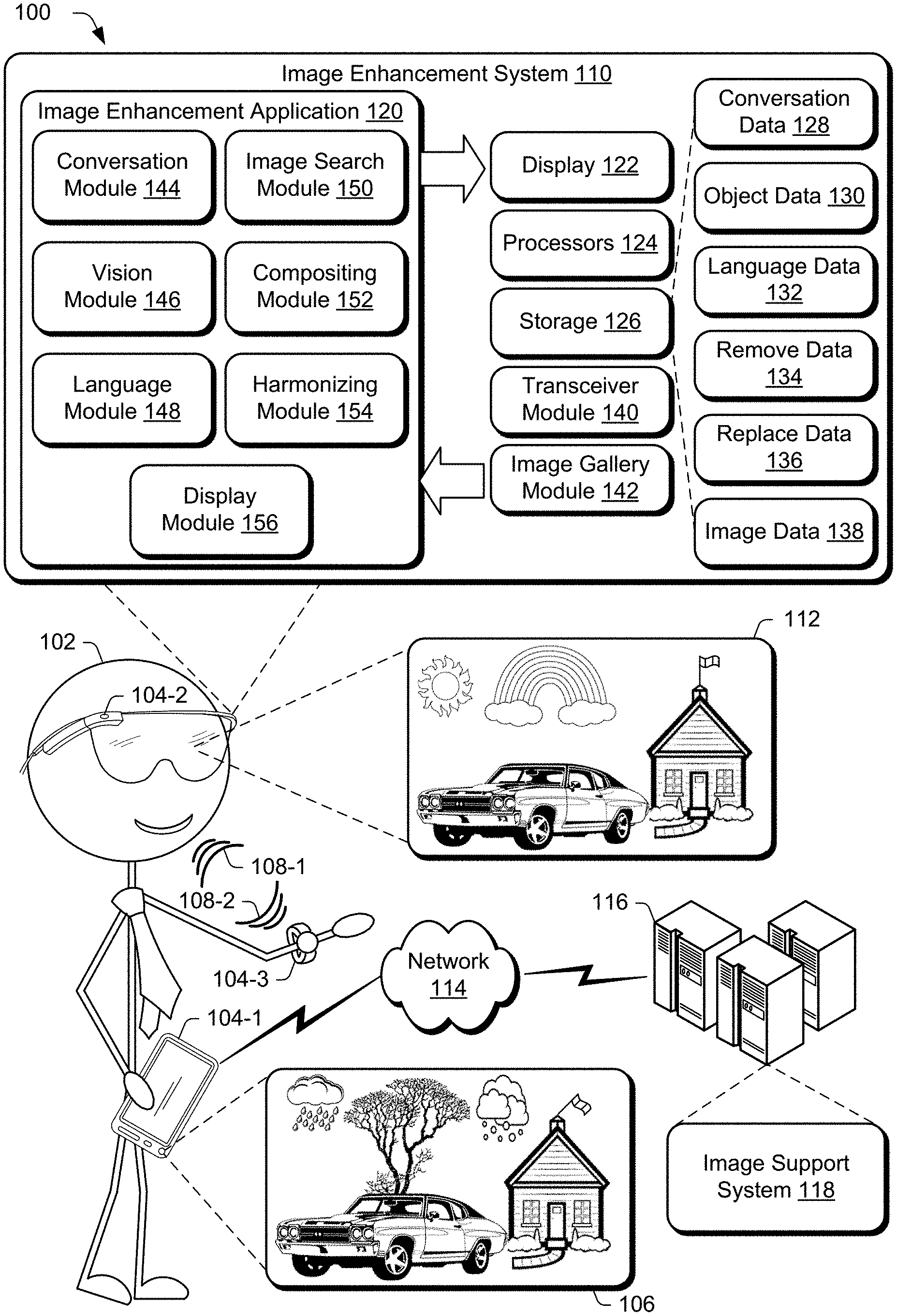

Scott Cohen, Brian Price, Abhinav Gupta

US patent, US10613726B2 (active)

China patent, CN109960453B (active)

UK patent, GB2569847B (active)

Australia patent, AU2018247342B2 (active)

Germany patent, DE102018007937A1 (pending)

Built Vera: Vision-enabled replacement assistant for directing a user conversation to obtain an edit query, and removing and replacing objects in an image based on the edit query.